In a world where “seeing is believing” no longer holds true, India is finally stepping in. The Ministry of Electronics and Information Technology (MeitY) recently proposed amendments to the IT Rules, 2021 to tackle the fast-spreading menace of deepfake content on the web. The idea is clear and simple: make the internet safer, more trusted, and more accountable. But as with most attempts to regulate technology, the details are layered in bureaucracy.

Commonly referred to as India’s deepfake laws, the new rules are expected to bring relief from rampant misinformation and AI-generated chaos. Yet they could also raise new concerns around surveillance, compliance, and creative freedom. Here’s a closer look at five ways India’s amendments to the IT rules could help, along with five areas where they might fall short.

Where the Law Draws its Strongest Lines

1. A Clear Definition, Finally

For the first time, India will have a legal definition of synthetically generated information. This will include any text, image, video, or multimedia created or modified using AI or computer algorithms. This is a huge step, as until now deepfakes fell into a grey area and were difficult to classify under the law. By naming and defining the threat, the government is setting a strong foundation for accountability.

2. Label AI-Generated Content Clearly

The new rules mandate that AI-generated content must carry visible labels or metadata identifiers that clearly distinguish it from regular media. For visual content, the label should cover at least 10% of the display area; for an audio clip, it should be present during the first 10% of its duration. The objective is to make AI-generated media instantly recognisable, reducing the risk of people mistaking fakes for real media.

Imagine scrolling through social media and knowing at a glance whether the content you are viewing is genuine or machine-made. This kind of transparency will bring a positive change in the way we consume information.
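
To put the 10% figure in perspective, here is a rough Python sketch of what the threshold could mean in practice. It is an illustration, not an official compliance formula: the function names are hypothetical, the banner layout is just one possible way to place a label, and reading the audio threshold as the first 10% of a clip's duration is an assumption.

```python
# Illustrative only: hypothetical helpers, not an official compliance formula.
MIN_PERCENT = 10  # the 10% threshold described in the proposed rules


def min_label_area(width_px: int, height_px: int, percent: int = MIN_PERCENT) -> int:
    """Smallest label area, in pixels, covering `percent` of the frame."""
    total = width_px * height_px
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-(total * percent) // 100)


def full_width_band_height(width_px: int, height_px: int, percent: int = MIN_PERCENT) -> int:
    """Height of a full-width banner that meets the area threshold."""
    area = min_label_area(width_px, height_px, percent)
    return -(-area // width_px)


def audio_label_seconds(duration_s: float, percent: int = MIN_PERCENT) -> float:
    """Length of the opening stretch of an audio clip that carries the label
    (assumes the threshold applies to the start of the clip)."""
    return duration_s * percent / 100


if __name__ == "__main__":
    print(min_label_area(1920, 1080))          # label area for a Full HD frame
    print(full_width_band_height(1920, 1080))  # banner height for the same frame
    print(audio_label_seconds(60))             # labelled seconds in a 1-minute clip
```

On a Full HD frame, that works out to a banner roughly a tenth of the screen height, which gives a sense of how prominent the label would be.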


3. Accountability for Big Platforms

The government will require social media intermediaries (SMIs) such as Meta, YouTube, and X (formerly Twitter) to verify and flag synthetic and AI-generated content on their platforms. They will be legally required to obtain a declaration from users on whether the uploaded media is AI-generated, and to verify that declaration using robust technical measures. This move aims to stop social media platforms from shrugging off responsibility when a deepfake clip goes viral.

4. Legal Protection for the Right Action

Removing content from social media is always controversial, as it has to walk the fine line between regulation and censorship. The proposed rules include a provision that if a platform removes content on reasonable suspicion, it will have legal immunity from being sued for censorship. The provision aims to encourage social media sites to act proactively and in good faith against deepfake content, limiting its spread.

5. Empowering Users and Restoring Trust

This could perhaps be the biggest win from the new IT Rules. As awareness of deepfakes grows, people are losing trust in what they see on the internet. From political speeches to celebrity endorsements, almost anything can now be faked to spread propaganda, pushing users away from trusting online media. Stricter regulations can restore faith in the social media ecosystem and maintain a sense of harmony.


The Flip Side: Where the Law Falters

1. Detection is Harder Than Definition

Defining AI-generated media is one thing; detecting it is another. Deepfake technology evolves at lightning speed, often outpacing detection tools. Even the best AI detectors today struggle with subtle, realistic-looking deepfakes. Without advanced technical infrastructure or collaboration with global AI entities, it will be difficult for the government to identify and regulate fake content within its jurisdiction. This could make the laws more symbolic than effective.

2. Compliance Burdens on Platforms

While global giants like Meta, YouTube, and X can handle these regulations with ease, smaller and emerging platforms like Arattai by Zoho may find compliance costly and complex. Embedding metadata, verifying user declarations, and maintaining proper moderation pipelines require a strong engineering backend and resources. This risk of over-regulation could hold back India’s innovation, something we saw with the restrictions on cryptocurrency, when enthusiasts shifted to the UAE and other overseas destinations for the same reason.

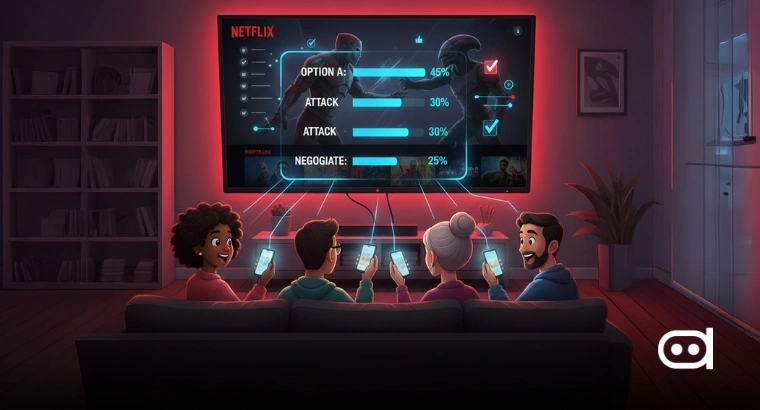

3. Effects on Creative Expression

Not all AI-generated content is harmful. Many creators and media houses use AI to create digital avatars and animations that present information to their audience in a better way. However, if such synthetic media gets flagged regularly, creators may shy away from these methods, holding back their creativity. The aim of the rules should be to adopt AI in a safe manner, not to drive it away.

4. Enforcement Irregularities

The proposed IT rules assign huge responsibilities to social media platforms, but they don’t specify clear enforcement mechanisms. It’s one thing to identify something under the legal definition; the problem lies in taking adequate action on it. AI is a vast field, and it’s not possible to cover everything in the legal books, which leaves loopholes that could be exploited.

5. Privacy and Surveillance Concerns

Metadata tagging adds traceability, which raises a major privacy concern. In order to detect AI-generated content, social media platforms will have to collect additional information that can be traced back to the original poster. This opens the door to surveillance risks, where private information can be exposed or misused. The government and social media apps will have to walk a fine line between transparency and privacy, and straying too far in either direction is risky.

The Bigger Picture

India’s move to amend its IT rules reflects a global awakening to the dangers of deepfakes. The United States and the United Kingdom are already pushing for ways to watermark AI-generated content, especially in response to altered footage of politicians and celebrities.

If these rules are implemented well, India could set an example for the world on how to govern AI-generated content while respecting the right to freedom of speech. They would not just clean up social media, but also help make the internet a safer place to be.