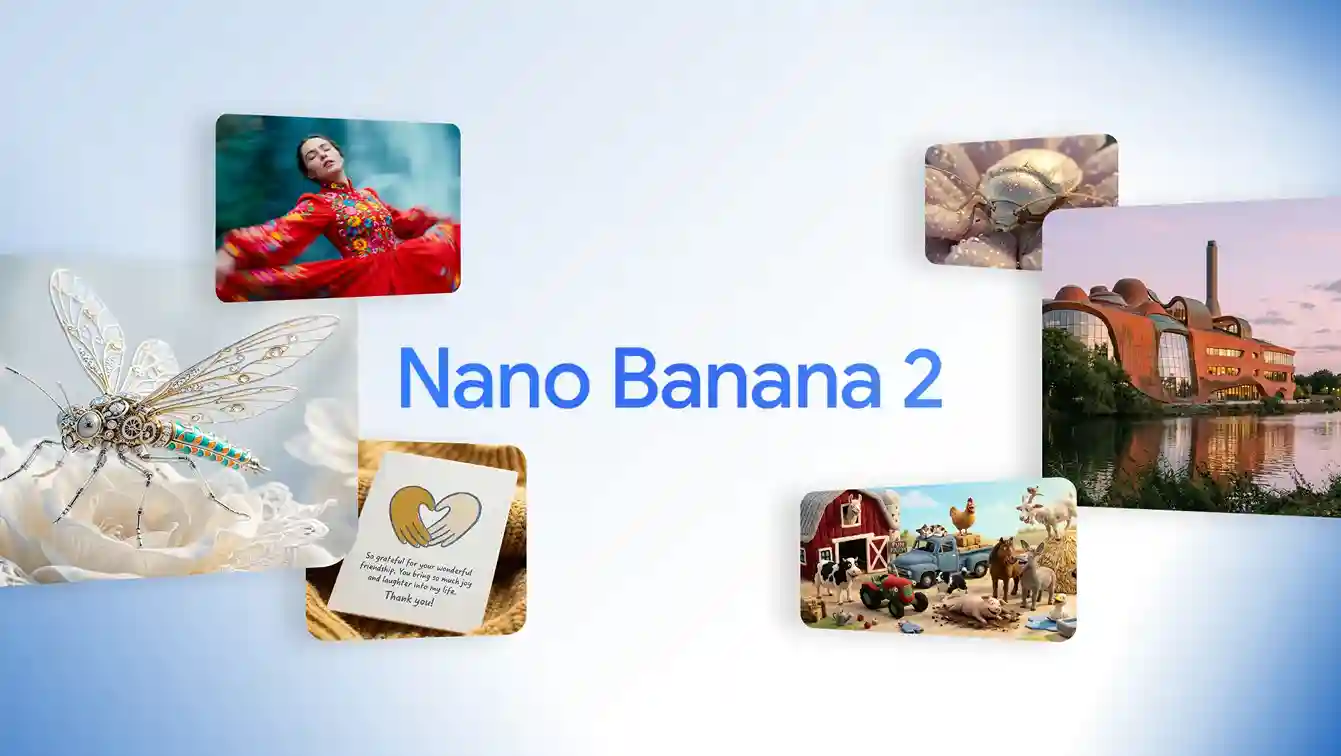

Key Highlights –

- Google’s Nano Banana is an update to Gemini’s native image editing features.

- Earlier image editing results in the Gemini app lacked finesse; this update notably improves them.

- The Nano Banana update lets users combine two separate images for a seamless composition.

Google CEO Sundar Pichai tweeted three banana emojis on August 26, putting all the Nano Banana rumours to rest. Soon after, the tech giant officially introduced the update, which lets users edit and modify photos with a single prompt.

🍌🍌🍌

— Sundar Pichai (@sundarpichai) August 26, 2025

In an official blog post, ‘Gemini Nano Banana’, Google revealed that the new image editing model comes from Google DeepMind and said it is the top-rated image editing model in the world (followed by Flux models, then ChatGPT). To make the model easier to access, Google has integrated it directly into the Gemini app.

How to Use Google’s Nano Banana AI Model

Google has stated that both paid and free users can try the updated image editing feature in the Gemini app. To begin, go to the official Gemini website. Then upload the image you want to edit (you can upload two if you want to combine them) and type a prompt, for example, “Create an image where the woman in the photo is cuddling the dog on a basketball court.” Gemini then returns the edited image.

The update also lets users add or remove elements in follow-up edits while preserving the rest of the image. This kind of step-by-step editing could be used, for example, for interior design or visualisation.

Users can also use one image as a reference, much like a colour picker, and apply its look to another. According to the blog, users can “..take the colour and texture of flower petals and apply it to a pair of rainboots, or design a dress using the pattern from a butterfly’s wings.”

Our image editing model is now rolling out in @Geminiapp – and yes, it’s 🍌🍌. Top of @lmarena’s image edit leaderboard, it’s especially good at maintaining likeness across different contexts. Check out a few of my dog Jeffree in honor of International Dog Day – though don’t let… pic.twitter.com/8Y45DawZBc

— Sundar Pichai (@sundarpichai) August 26, 2025

To promote responsible use of AI, Google said that all images generated using Gemini will include a visible watermark as well as its own invisible SynthID digital watermark. This helps identify AI-generated content and supports transparency on the internet.

Google’s Latest Efforts in the AI Race

Apart from sharing images of his dog made with the new Nano Banana update, Pichai also announced that the tech giant will invest 9 billion U.S. dollars in Virginia through 2026 for AI and cloud infrastructure.

We’re investing $9B in Virginia through 2026 for AI and cloud infrastructure in the state. This will go towards building a new data center in Chesterfield County and expanding our existing facilities in Loudoun and Prince William Counties. https://t.co/bR6CycHCr0

— Sundar Pichai (@sundarpichai) August 27, 2025

Google also introduced a new AI-powered live translation update for the Google Translate app. With this update, the app can translate more than 70 languages, including Arabic, French, Hindi, Korean, Spanish and Tamil. The feature is available in both the Android and iOS apps.

Another addition to the app is a new language practice feature. Google Translate will now create “tailored listening and speaking practice sessions” for its users. Google does seem to be entering the language learning domain a little late, considering that existing language apps such as Duolingo already dominate it.

Google also recently added support for more than 80 languages to its research and learning AI tool, NotebookLM.