Key Highlights:

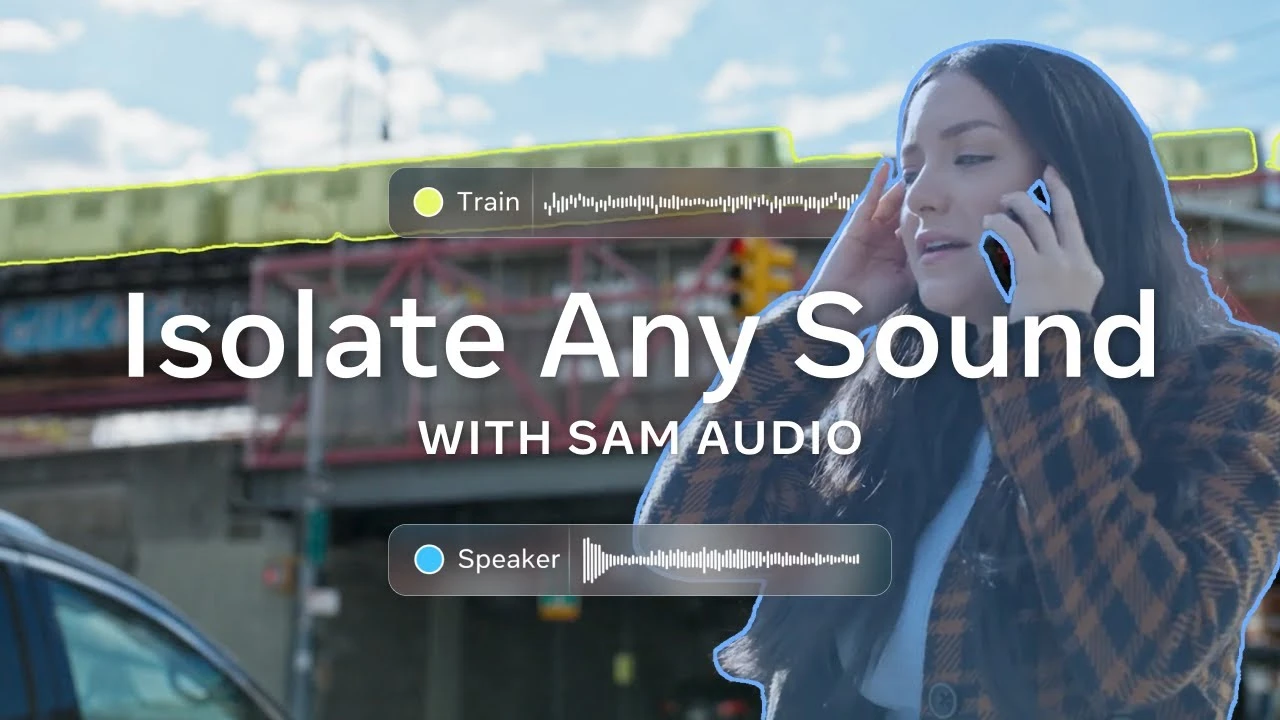

- Meta has launched SAM Audio, a new AI model that helps you isolte audio from any messy, real-life recording.

- There are three prompting types you can use: text, visual, and span, which Meta calls an “industry-first.” One model, many use cases, with

- Meta positions SAM Audio as a unified alternative to fragmented audio tools. The AI model is now live in the Segment Anything Playground and available for hands-on testing or download.

Although Meta might not be making progress with its Llama Large Language Model (LLM), there is something it’s working on and is quite serious about. Here, I’m referring to Meta’s Segment Anything family. Speaking of which, the company has unveiled SAM Audio. That’s a new AI model that promises to make sound separation feel almost as intuitive as pointing at something on a screen and saying, “That, I want that.”

Meta’s SAM Audio model can isolate audio using three different kind of prompts

At its core, SAM Audio is designed to isolate specific audio elements from messy, real-world recordings. Imagine traffic noise disrupting a street interview, or a barking dog interrupting a podcast, or background chatter killing the vocals from your favorite artists’ concert. SAM Audio can do it all, without requiring you to edit them out using separate tools. Not to forget, this launch comes weeks after Meta announced SAM 3 & SAM 3D models for smarter image & 3D editing.

What makes SAM Audio stand out is how you tell it what you want. Meta says the model supports three different prompt types, which can be used alone or combined. The first and very obvious is text prompts. You can literally type something like “dog barking” or “singing voice,” and the model will attempt to extract just that sound from the mix — amazing, right?

Next, you’ve visual prompts, which you can use to click on the person or object producing a sound. After you’re done, SAM Audio will isolate the corresponding audio — it’s that simple. Meanwhile, the third option is span prompting. This is where things actually get interesting, Meta even goes onto call it an industry first.

What’s interesting is that instead of describing or pointing, you can mark a specific time range where the target sound occurs. This way, you can have precise control over at what time audio has to be pulled or removed. Putting it simply, it could be a big deal for creators involved in long recordings like podcasts or research footage.

You may also like: Adobe Firefly gets smarter AI video tools and unlimited generations for limited-time

The AI model can be handy in multiple industries

SAM Audio is looking to tap the market filled with tools that have narrow use cases. For example, you might need separate tools for voice isolation and noise reduction, you get it, right? SAM Audio, in contrast, looks to position itself as a single, unified model that performs well across a wide range of real-world scenarios.

Speaking of that, the AI model can be super handy in music production, podcast editing,and much more. Meta also highlights its potential application in the film/television industry, and even scientific research. In short, wherever there’s a need to isolate sound, SAM Audio fits in.

You may also like: Meta’s upcoming ‘Avocado’ AI model could be a part of effort to rebalance its open-source vision

Developers can download this model right away

SAM Audio is available now via the Segment Anything Playground. For those unaware, that’s a new platform where users can test Meta’s latest models. You can experiment using provided audio and video assets or upload your own files to see how the model handles them. For developers and researchers, Meta is also making the model available to download.