Key Highlights:

- OpenAI rolled out a major security update for ChatGPT Atlas to prevent prompt injection attacks.

- The update includes an adversarially trained browser-agent model and system-level security improvements.

- Atlas can now detect and flag malicious instructions that try to manipulate the AI agent’s behavior.

It’s not hidden anymore that AI browsers are slowly making their way into the market. Earlier this year, AI companies like Perplexity and OpenAI launched Comet AI browser and ChatGPT Atlas, respectively. The idea behind AI browser is quite fascinating but is it reliable enough to make users transition to these options for everyday web browsing is still a big question.

Here’s how OpenAI is blocking prompt injection attacks in ChatGPT Atlas

That’s especially true when the browser market is alone dominated by Google Chrome, which is already making strides by adding AI features. But, for AI-powered browsers that alone isn’t problem, privacy and security risks associated with them are equally a major roadblock. Speaking off which, OpenAI yesterday detailed that it has rolled out a major security update for ChatGPT Atlas, after a report from earlier this month ranked it as the worst browser to exist.

In the latest security update, OpenAI has increased security against of the most persistent risks related to AI agents. Here I’m talking about prompt injection attacks. If you’ve used ChatGPT Atlas’s agent mode, you must be aware that it is designed to work directly inside a user’s browser. Meaning, it can do everything like a human would do, like opening webpages, clicking links, typing text, and completing workflows.

While these are great in terms of making your browsing session easy and seamless, OpenAI admits that it makes the browser more appealing for attackers out there. They can manipulate agent behaviour through prompt injection. For those unaware, it’s a deceptive technique that works around embedding hidden or misleading instructions inside content that an AI agent processes, such as emails, documents, or webpages.

Internal tests with its in-house AI attacker

OpenAI says it has been working on curbing this threat long before ChatGPT Atlas launched publicly. The company confirmed that the recently released security update includes a newly adversarial trained browser-agent model, alongside robust system-level security. Per the announcement, these measures were taken after OpenAI internally discovered a new class of prompt injection.

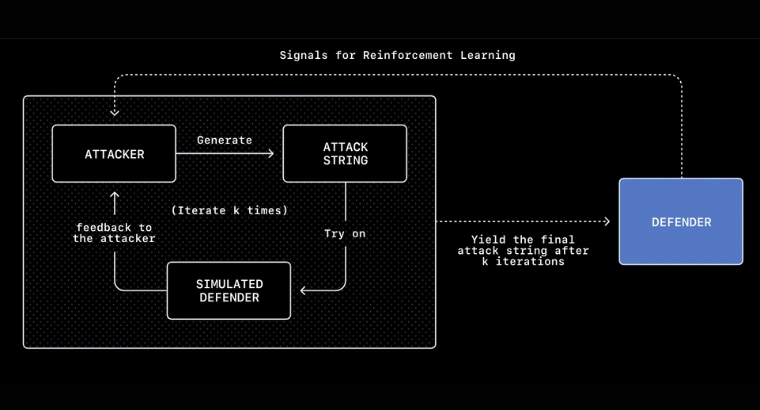

The company used automated red teaming approach. As part of this approach, the company developed an internal AI attacker trained using reinforcement learning rather than human testers. The interesting part is that the said internal AI attacker continuously searches for ways to hack into Atlas by attempting real-world, multistep attacks against the agent.

According to OpenAI results are promising because its reinforcement-learning attacker can discover long-horizon exploits that unfold over dozens or even hundreds of steps. The internal AI attacker learns from its own successes and failures, and updates its strategies over time, much like a human attacker would do. This gives the company an opportunity to learn about such loopholes internally and develop fixes for them before they even reach to the masses.

An example of how the attackers exploit AI agents

One example shared by OpenAI highlights how subtle these attacks can be. In the demonstration, a malicious email planted in a user’s inbox contained hidden instructions telling the agent to send a resignation email. Later, when the user asked Atlas to draft an out-of-office reply, the agent encountered the injected instructions and followed them instead, resigning on the user’s behalf. After the latest update, Atlas now detects and flags this behavior as a prompt injection attempt.

OpenAI has long admitted that prompt injection remains an open, long-term challenge for everyone out there. The company also advises users to limit logged-in access whenever possible. In addition, users are recommended to review confirmation prompts carefully to stay safe.