Key Highlights

- Anthropic has implemented a new policy that restricts the use of its AI models for military purposes by U.S. government agencies, citing its core commitment to “responsible AI.”

- The policy, while allowing use by civilian law enforcement, has reportedly drawn a negative reaction from the White House, which is pushing for closer collaboration between tech companies and the military.

- The move highlights a growing trend among AI companies to set their own ethical boundaries on technology use, even as they face pressure from government agencies and navigate a competitive market.

AI startup Anthropic is reportedly irking the White House with a new policy that limits how U.S. government agencies can use its AI models. The new terms of service create a clear distinction between law enforcement and military applications, with the company drawing a firm line against the latter.

According to a Semafor report, the policy prohibits the use of Anthropic’s models for military purposes, including weapons development, intelligence gathering, and surveillance. The policy does, however, allow use by civilian law enforcement and government agencies. This distinction places Anthropic in a complicated position, as it is a major recipient of venture capital from U.S. firms and has received direct financial backing from the U.S. government.

The ‘Why’ Behind Anthropic’s Move and the Fallout

Anthropic, founded on a commitment to “responsible AI,” has long been vocal about the need to prevent powerful AI from being used for dangerous purposes. The company’s CEO, Dario Amodei, has previously warned about the risks of AI-powered espionage and has publicly advocated for stricter government oversight of the technology. The new policy extends that philosophy: the company’s stance is that its technology should advance civilian applications, not military ones.

The policy has reportedly drawn a negative reaction from the White House, which has been pushing for greater collaboration between the private sector and the U.S. military to maintain a technological edge over rivals. The Trump administration has previously called for a unified front among leading AI companies to ensure American leadership in the field. This move by Anthropic could be seen as a break from that strategy.

A Growing Trend of AI Companies Drawing a Line

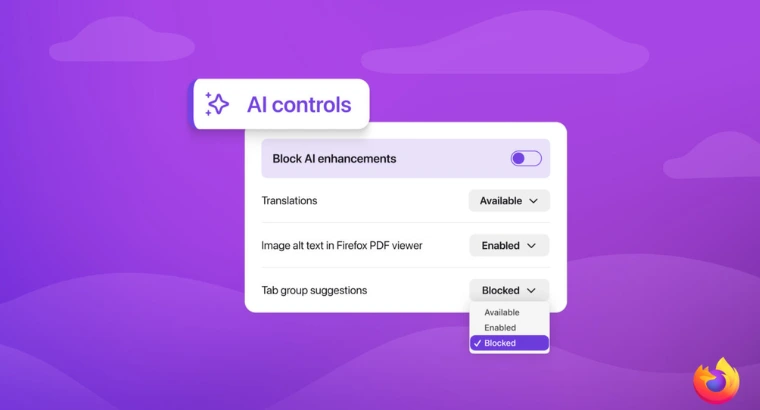

The decision by Anthropic is part of a broader trend of tech companies defining their own ethical boundaries for AI, often ahead of government regulation. It is also a way for the company to carve out its own identity in a crowded market dominated by major players like Google and OpenAI.

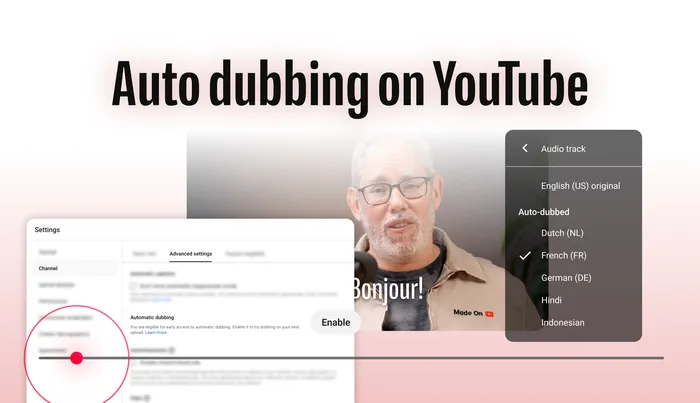

Recent announcements, such as YouTube’s introduction of multi-language audio for creators and Google’s Veo 3, show how generative AI is becoming a core part of product strategy. Tech companies are not just developing new AI models but also building tools and policies around them that reflect their values.

For Anthropic, the policy is an attempt to balance its commercial interests with its ethical principles. While it may alienate some government clients, it could also appeal to a growing number of businesses and creators who are concerned about the military applications of AI. The ultimate impact of the policy remains to be seen, but it signals a new phase in the relationship between Silicon Valley and Washington, where tech companies are increasingly willing to push back on government demands.