Key Highlights

- xAI's decision to open-source its AI models is intended to build trust and grow a developer ecosystem

- Elon Musk also revealed a plan to scale xAI's computing power to the equivalent of 50 million H100 chips within five years

- xAI files new Macrohard trademark

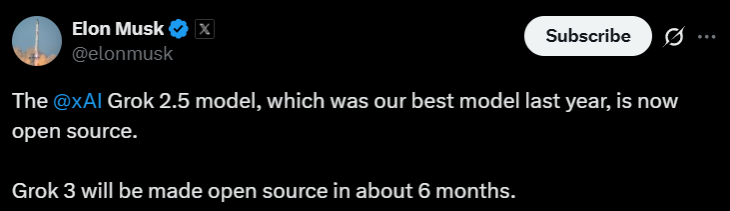

Tech mogul Elon Musk has revealed future plans for his artificial intelligence (AI) venture, xAI, and officially disclosed that last year’s “best model”, Grok 2.5, is now open source.

He added that Grok 3 will also be made open source in about six months.

(Source: Elon Musk on X)

On August 24, Elon Musk shared a series of posts on X (formerly Twitter) in which he also laid out timelines for upcoming launches and an infrastructure roadmap intended to establish xAI as a leader in the AI race. In these posts, he singled out computing power as the key differentiator.

Open-Sourcing Grok Models

The headline announcement was xAI’s decision to open-source Grok 2.5. Musk confirmed that both the model’s code and weights are now freely available to developers and researchers via repositories like Hugging Face, under the Grok 2 Community License Agreement. This allows external parties to inspect the architecture and develop new AI-based applications.

He also described Grok 3 as “an order of magnitude more capable than Grok 2,” calling it the “smartest AI on Earth.” The muscle behind this brain is xAI’s new supercomputer, Colossus, a genuine powerhouse that offers ten times the computing power of its predecessor.

Is Open-Sourcing a Good Idea for xAI?

The decision to make previous models open source comes as xAI prepares to launch Grok 5. By giving away its crown jewels, xAI wants to build trust and attract the brightest minds from startups and universities to create a community around its technology.

The reasoning is that more eyes on the code will lead to better, safer, and more robust AI.

However, this approach is a double-edged sword. While community scrutiny can enhance safety, it also allows competitors to replicate and even enhance xAI’s technology.

For now, though, Grok 4 remains in the walled garden for paying subscribers, with no specific timeline for its own open-source release.

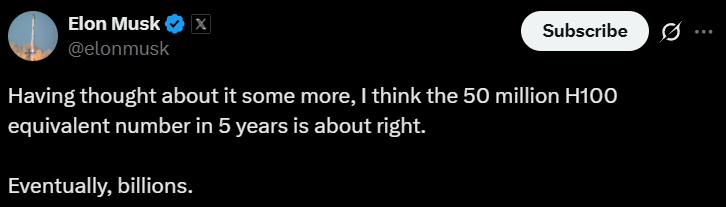

Musk’s Plan to Enhance xAI with 50M NVIDIA H100 Chips

(Source: Elon Musk on X)

Musk has also revealed a plan for xAI to operate the equivalent of 50 million NVIDIA H100 chips within the next five years. Currently, the company’s Colossus data center in Memphis runs on about 200,000 of these chips. Even major players like Meta aim for only 600,000. In other words, xAI plans to outpace the competition through sheer scale.
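To put those figures side by side, here is a rough back-of-the-envelope sketch. It assumes the chip counts quoted above are directly comparable (the 50 million target is stated in H100 equivalents, while the other figures are chip counts), and the idea of steady year-over-year growth is purely an illustrative assumption, not something Musk stated. The function and variable names are hypothetical.

```python
# Figures as quoted in the article; treated here as rough, comparable counts.
TARGET_H100_EQUIV = 50_000_000  # xAI's stated five-year goal (H100 equivalents)
COLOSSUS_TODAY = 200_000        # approximate chips in Colossus today
META_TARGET = 600_000           # Meta's figure as cited in the article
YEARS = 5


def scale_factor(target: float, baseline: float) -> float:
    """How many times larger the target is than the baseline."""
    return target / baseline


def implied_annual_growth(target: float, baseline: float, years: int) -> float:
    """Constant yearly multiplier needed to reach the target (an assumption)."""
    return (target / baseline) ** (1 / years)


vs_today = scale_factor(TARGET_H100_EQUIV, COLOSSUS_TODAY)
vs_meta = scale_factor(TARGET_H100_EQUIV, META_TARGET)
annual = implied_annual_growth(TARGET_H100_EQUIV, COLOSSUS_TODAY, YEARS)

print(f"Target vs. Colossus today: {vs_today:.0f}x")
print(f"Target vs. Meta's figure:  {vs_meta:.1f}x")
print(f"Implied yearly multiplier over {YEARS} years: {annual:.2f}x")
```

On these numbers, the goal is 250 times today's Colossus and roughly 83 times Meta's cited figure, which would mean about tripling capacity every year for five years if growth were steady.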

“xAI will soon be far beyond any company besides Google, then significantly exceed Google,” Elon Musk stated in a post on X. OpenAI is reportedly planning a similar build-out.

Like other AI companies, xAI is also developing its own in-house chips to end its dependence on NVIDIA.

He also highlighted China’s strength in the AI sector, saying, “Companies in China will be the toughest competitors, because they have so much more electricity than America and are super strong at building hardware.”

xAI’s New Trademark ‘Macrohard’

Elon Musk has also articulated an ambitious vision for consumer technology, in which personal devices such as smartphones and laptops will turn into what he called “edge nodes for AI inference.” This approach shifts AI workloads from today’s cloud-based processing to localized computation directly on the device.

These details follow xAI’s latest trademark filing, “Macrohard”. While the name is a tongue-in-cheek homage to Microsoft, the project is conceived as a fully automated software company in which AI handles all essential functions, including coding, debugging, testing, and systems management.

Hosted on the immense Colossus 2 supercomputer, this project serves as a large-scale experiment into the feasibility of AI self-governance in complex business contexts.

“Devices will just be edge nodes for AI inference, as bandwidth limitations prevent everything from being done server-side,” Elon Musk stated in the post.